In this post, I'm sharing the interview questions and answers from a Principal Architect-level interview at Aristocrat.

Kindly go through my other interview posts:

- Java Technical Architect Interview @ Xebia

- Java Interview @ IndieGames

- Java Interview @ Fresher Level - 1

- Java Interview @ Fresher Level - 2

- Docker Interview questions

- Interview @ Xebia : Round - 2

- Java Technical interview @ IBM

- Java Interview @ Polaris

- Java Technical Architect interview : Round-2

- Code Review Practices

- Multithreading interview in Ericsson

- Java Interview @ Altran

- Java Technical Lead interview @ Accenture

- Java Interview @ 1mg

- Java Technical Architect interview @ Tech Mahindra

- Microservices interview 4 : circuit breaker

Question 1:

What is Apache Kafka? When is it used? What are the roles of Producer, Consumer, Partition, Topic, Broker and ZooKeeper in Kafka?

Answer:

Apache Kafka is a distributed publish-subscribe messaging system.

It is used to process real-time streams of data and to collect big data for real-time analysis.

Topic: A topic is a category, feed or named stream to which records are published. Kafka stores each topic as log files on disk.

Broker: A Kafka cluster is a set of servers, each of which is called a broker.

Partition: Topics are broken up into ordered commit logs called partitions. Kafka spreads a topic's partitions across multiple servers (brokers) or disks.

Producer: A producer can be any application that publishes messages to a topic. When a message has no key, the producer does not care which partition it is written to and spreads such messages across all partitions of the topic.

To direct messages to a specific partition, the producer uses the message key and a partitioner: the partitioner hashes the key and maps the hash to a partition, so messages with the same key always land in the same partition.

A producer publishes each message as a key-value pair.
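A minimal sketch of key-based partitioning, using a hypothetical `SimplePartitioner` class. It hashes the key bytes with `Arrays.hashCode` purely for illustration; Kafka's built-in partitioner uses murmur2 for keyed messages, so real partition numbers will differ. What it shows is the property described above: the same key always maps to the same partition, while keyless messages are spread over all partitions.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified partitioner (NOT Kafka's real implementation).
// Assumption: Arrays.hashCode stands in for Kafka's murmur2 hash.
class SimplePartitioner {
    private final AtomicInteger counter = new AtomicInteger();

    int partition(String key, int numPartitions) {
        if (key == null) {
            // No key: rotate over partitions (newer Kafka producers use "sticky" batching instead).
            return Math.floorMod(counter.getAndIncrement(), numPartitions);
        }
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        // Clear the sign bit so the hash is non-negative, then map it onto a partition.
        return (Arrays.hashCode(bytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        SimplePartitioner p = new SimplePartitioner();
        // Same key, same partition: per-key ordering is preserved.
        System.out.println(p.partition("order-42", 6) == p.partition("order-42", 6));
        // Null keys are spread across partitions 0, 1, 2, 0, ...
        for (int i = 0; i < 4; i++) {
            System.out.print(p.partition(null, 3) + " ");
        }
        System.out.println();
    }
}
```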

Consumer: A consumer can be any application that subscribes to a topic and consumes its messages.

A consumer can subscribe to one or more topics and reads messages sequentially within each partition.

The consumer keeps track of the messages it has consumed by tracking its offset in each partition it reads.
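To make offset tracking concrete, here is a small in-memory model. The class `OffsetTrackingConsumer`, its `poll`/`commit`/`restart` methods and the partition name `orders-0` are hypothetical illustrations, not the `KafkaConsumer` API; a partition is assumed to be a plain `List<String>`. It shows why committing matters: after a restart, the consumer resumes from its last committed offset, not from the beginning.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of consumer offset tracking (not the KafkaConsumer API).
class OffsetTrackingConsumer {
    private final Map<String, List<String>> partitions;          // partition name -> messages in offset order
    private final Map<String, Long> position = new HashMap<>();  // next offset to read, in memory only
    private final Map<String, Long> committed = new HashMap<>(); // progress that survives a restart

    OffsetTrackingConsumer(Map<String, List<String>> partitions) {
        this.partitions = partitions;
    }

    // Read up to maxRecords in order, starting at the current position,
    // else at the last committed offset, else at offset 0.
    List<String> poll(String partition, int maxRecords) {
        List<String> log = partitions.get(partition);
        long start = position.getOrDefault(partition, committed.getOrDefault(partition, 0L));
        List<String> batch = new ArrayList<>();
        for (long offset = start; offset < log.size() && batch.size() < maxRecords; offset++) {
            batch.add(log.get((int) offset));
        }
        position.put(partition, start + batch.size());
        return batch;
    }

    // Save current positions; as in Kafka, the saved value is the NEXT offset to read.
    void commit() {
        committed.putAll(position);
    }

    // Simulate a consumer restart: uncommitted progress is lost.
    void restart() {
        position.clear();
    }

    public static void main(String[] args) {
        Map<String, List<String>> parts = new HashMap<>();
        parts.put("orders-0", List.of("m0", "m1", "m2", "m3"));
        OffsetTrackingConsumer c = new OffsetTrackingConsumer(parts);
        System.out.println(c.poll("orders-0", 2));  // [m0, m1]
        c.commit();
        System.out.println(c.poll("orders-0", 2));  // [m2, m3], not committed
        c.restart();
        System.out.println(c.poll("orders-0", 10)); // [m2, m3] again, resumed from the commit
    }
}
```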

ZooKeeper: ZooKeeper is used to manage and coordinate the Kafka brokers. Newer Kafka versions can run without ZooKeeper by using KRaft mode.

Question 2:

What are Kafka's main features?

Answer:

Kafka features are listed below:

- High throughput: Handles hundreds of thousands of messages per second on modest hardware.

- Scalability: Kafka is a distributed system that can be scaled out, for example by adding brokers, without downtime.

- No data loss: When configured properly, Kafka does not lose acknowledged messages.

- Stream processing: Kafka can be used with real-time stream-processing frameworks such as Apache Spark and Apache Storm.

- Durability: Messages are persisted to disk.

- Replication: Partitions can be replicated across multiple brokers, so messages remain available if a broker fails.
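As one illustration of what "configured properly" can mean on the producer side, here is a sketch of settings commonly used for durability. The property names (`bootstrap.servers`, `acks`, `enable.idempotence`, `key.serializer`, `value.serializer`) are real Kafka producer configs; the class name `DurableProducerConfig` and the broker address `localhost:9092` are assumptions. On the topic side this is usually paired with a replication factor greater than 1 and the `min.insync.replicas` setting, which are not shown here. The sketch only builds a `java.util.Properties` object, so it runs without the Kafka client library.

```java
import java.util.Properties;

// Hypothetical helper that builds producer settings aimed at avoiding message loss.
class DurableProducerConfig {
    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // A send succeeds only after all in-sync replicas have the record.
        props.put("acks", "all");
        // Retries of transient failures will not write duplicate records.
        props.put("enable.idempotence", "true");
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        // With kafka-clients on the classpath, these would be passed to new KafkaProducer<>(props).
        Properties props = build("localhost:9092");
        System.out.println(props.getProperty("acks"));
    }
}
```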

Question 3:

What are the components of Kafka?

Answer:

Kafka components are:

- Topic

- Partition

- Producer

- Consumer

- Messages

A topic is a named category or feed to which records are published. Topics are broken up into ordered commit logs called partitions.

Each message in a partition is assigned a sequential id called an offset.

Data in a topic is retained for a configurable period of time.

Writes to a partition are generally sequential, which reduces the number of hard-disk seeks.

Consumers can read messages from the beginning of a partition, and can also rewind or skip to any point in it by specifying an offset.
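The append-and-read behaviour of a single partition can be sketched as an in-memory, append-only list. The class `PartitionLog` and its methods are hypothetical; real Kafka keeps this log on disk. Each append gets the next sequential offset, and a read can start at any valid offset, which is what rewinding or skipping amounts to.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model of one partition: an append-only log with sequential offsets.
class PartitionLog {
    private final List<String> messages = new ArrayList<>();

    // Writes always go to the end of the log; returns the offset assigned to the message.
    long append(String message) {
        messages.add(message);
        return messages.size() - 1;
    }

    // Read up to max messages starting at fromOffset:
    // 0 reads from the beginning, an earlier offset rewinds, a later one skips ahead.
    List<String> read(long fromOffset, int max) {
        if (fromOffset < 0 || fromOffset > messages.size()) {
            throw new IllegalArgumentException("offset out of range: " + fromOffset);
        }
        int start = (int) fromOffset;
        int end = (int) Math.min(messages.size(), (long) start + max);
        return new ArrayList<>(messages.subList(start, end));
    }

    public static void main(String[] args) {
        PartitionLog log = new PartitionLog();
        log.append("a");
        log.append("b");
        log.append("c");
        System.out.println(log.read(0, 10)); // from the beginning
        System.out.println(log.read(2, 10)); // skip ahead to offset 2
    }
}
```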

A partition is the actual storage unit for Kafka messages and can be thought of as a Kafka message queue. The number of partitions is configured per topic when the topic is created.

Messages in a partition are split into multiple segments, which makes it easier to find a message by its offset.

The default maximum size of a segment is fairly large (1 GB) and can be configured, for example with the broker setting `log.segment.bytes`.

Each segment is composed of the following files:

- Log: Stores the messages themselves.

- Index: Maps message offsets to their starting positions in the log file.

- TimeIndex: Maps message timestamps to offsets, so messages can be looked up by time.
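Segment rolling and offset lookup can be sketched with a `TreeMap` keyed by each segment's base offset: `floorEntry(offset)` returns the segment whose base offset is the largest one not greater than the requested offset. The class `SegmentedLog` is hypothetical, the segment size limit is tiny for demonstration (Kafka's default is 1 GB), message size is assumed to be its UTF-8 length, and messages are kept in memory instead of in `.log` and `.index` files. The zero-padded, 20-digit file name mirrors how Kafka names segment files after their base offset.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of a partition split into size-limited segments.
class SegmentedLog {
    static final class Segment {
        final long baseOffset;                      // offset of the first message in this segment
        final List<String> messages = new ArrayList<>();
        long bytes;                                 // bytes used so far

        Segment(long baseOffset) { this.baseOffset = baseOffset; }

        // Segment files are named after the base offset, zero-padded to 20 digits.
        String logFileName() { return String.format("%020d.log", baseOffset); }
    }

    private final TreeMap<Long, Segment> segments = new TreeMap<>();
    private final long maxSegmentBytes;
    private long nextOffset = 0;

    SegmentedLog(long maxSegmentBytes) {
        this.maxSegmentBytes = maxSegmentBytes;
        segments.put(0L, new Segment(0L));
    }

    long append(String message) {
        long size = message.getBytes(StandardCharsets.UTF_8).length;
        Segment active = segments.lastEntry().getValue();
        // Roll a new segment when the active one would exceed the size limit.
        if (active.bytes > 0 && active.bytes + size > maxSegmentBytes) {
            active = new Segment(nextOffset);
            segments.put(nextOffset, active);
        }
        active.messages.add(message);
        active.bytes += size;
        return nextOffset++;
    }

    // Pick the segment with the greatest base offset <= offset, then index into it.
    String read(long offset) {
        Map.Entry<Long, Segment> entry = segments.floorEntry(offset);
        if (entry == null || offset >= nextOffset) {
            throw new IllegalArgumentException("no such offset: " + offset);
        }
        Segment s = entry.getValue();
        return s.messages.get((int) (offset - s.baseOffset));
    }

    Segment segmentFor(long offset) { return segments.floorEntry(offset).getValue(); }

    int segmentCount() { return segments.size(); }
}
```

For example, with a 10-byte limit, appending three 4-byte messages rolls a second segment at offset 2, and `read(2)` is served from `00000000000000000002.log`.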

Question 4:

What is a Kafka cluster?

Answer:

A Kafka cluster is a set of servers, each of which is called a broker.

In the above diagram, the Kafka cluster is the set of servers shown with the Broker name. Producers publish messages to topics on these brokers, and consumers subscribe to these topics and consume the messages.

ZooKeeper is used to coordinate the Kafka servers.
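A cluster spreads each topic's partitions, and their replicas, over its brokers. The sketch below uses a simplified round-robin placement: partition `p` gets its leader on broker `p % n` and its followers on the next brokers. The class `ReplicaPlacement` is hypothetical, and Kafka's real assignment differs in detail (for example, it randomizes the starting broker and can be rack-aware), so treat this only as an illustration of the idea.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified round-robin placement of partition replicas on brokers.
class ReplicaPlacement {
    // For each partition, returns the broker ids holding a replica; the first one is the leader.
    static List<List<Integer>> assign(int numPartitions, int numBrokers, int replicationFactor) {
        if (replicationFactor > numBrokers) {
            throw new IllegalArgumentException("replication factor cannot exceed broker count");
        }
        List<List<Integer>> assignment = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int r = 0; r < replicationFactor; r++) {
                replicas.add((p + r) % numBrokers); // leader on broker p % n, followers on the next brokers
            }
            assignment.add(replicas);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // 3 brokers, a topic with 3 partitions, each stored on 2 brokers.
        List<List<Integer>> a = assign(3, 3, 2);
        for (int p = 0; p < a.size(); p++) {
            System.out.println("partition " + p + " -> brokers " + a.get(p));
        }
    }
}
```

With this placement each broker leads one partition and follows another, so losing any single broker still leaves a copy of every partition.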

Question 5:

What problem does Kafka resolve?

Answer:

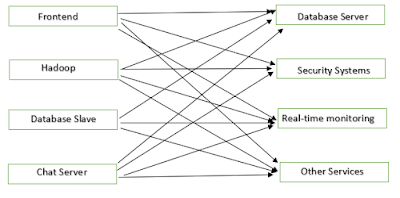

Without any messaging queue, the communication between client nodes and server nodes looks like the diagram below:

There are a large number of data pipelines used for communication, which makes it very difficult to update this system or add another node.

If we use Kafka, the entire system looks something like this:

So, all the client servers send messages to topics in Kafka, and all backend servers consume messages from Kafka topics.
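A rough worked count shows why this helps. Assume, for illustration, that each of $N$ client nodes needs a direct pipeline to each of $M$ backend nodes, and treat the Kafka cluster as a single hub; with $N = 4$ and $M = 5$:

```latex
\begin{aligned}
\text{without Kafka: } & N \times M = 4 \times 5 = 20 \text{ pipelines} \\
\text{with Kafka: }    & N + M = 4 + 5 = 9 \text{ connections}
\end{aligned}
```

Adding one more backend node then costs one new connection to Kafka instead of $N$ new pipelines.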

That's all for this post.

Thanks for reading!!
